Using BigQuery Dataframes with dbt python models

Introduction

In this guide, you'll learn how to set up dbt so you can use it with BigQuery Dataframes (BigFrames):

- Build scalable data transformation pipelines using dbt and Google Cloud, with SQL and Python.

- Leverage BigFrames from dbt for scalable BigQuery SQL.

In addition to the existing dataproc/pyspark based submission methods for executing python models, you can now use the BigFrames submission method to execute Python models with pandas-like and scikit-like APIs, without the need of any Spark setup or knowledge.

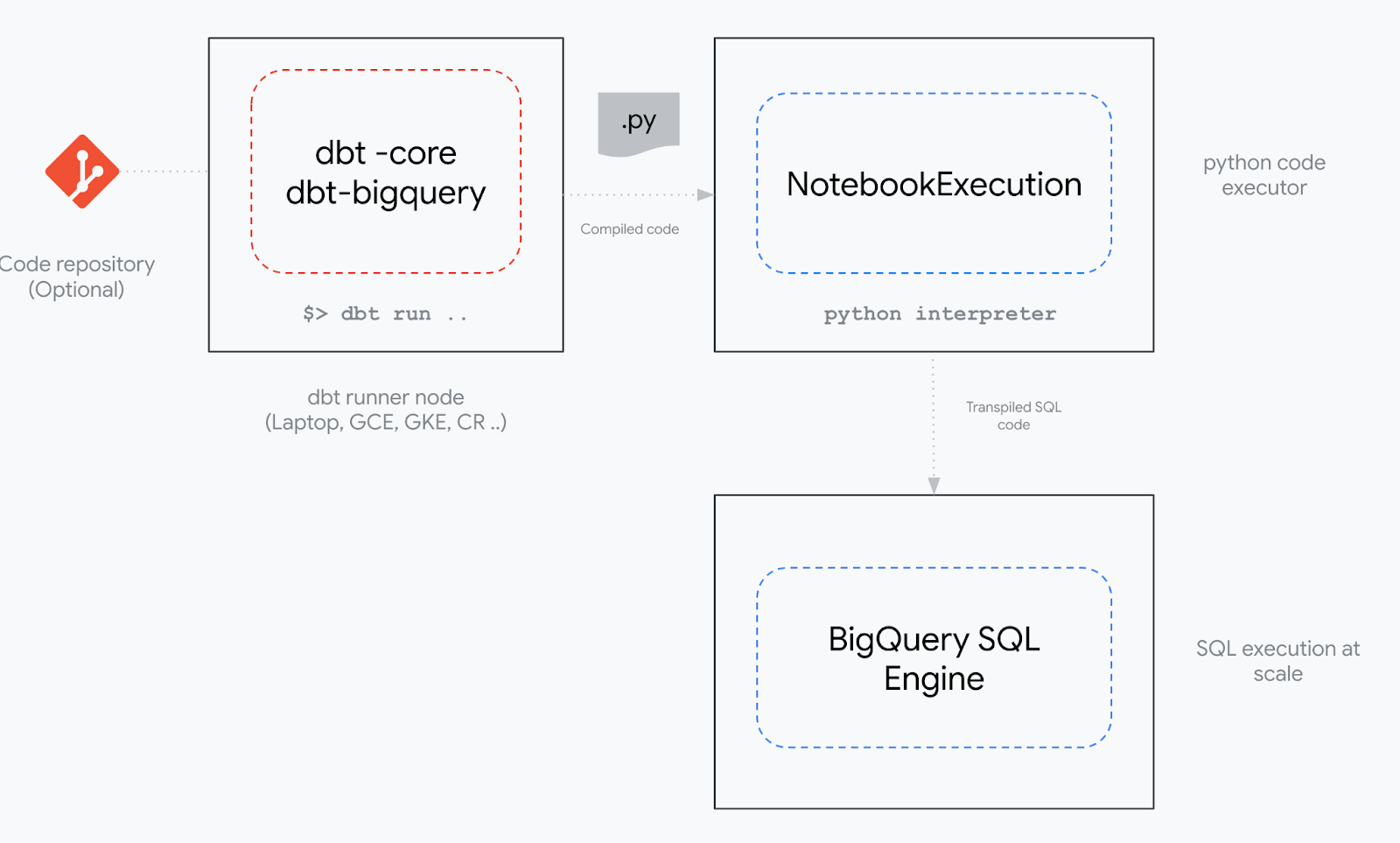

BigQuery Dataframes is an open source python package that transpiles pandas and scikit-learn code to scalable BigQuery SQL. The dbt-bigquery adapter relies on the BigQuery Studio Notebook Executor Service to run the python client side code.

Prerequisites

- A Google Cloud account

- A dbt Cloud account

- Basic to intermediate SQL and python.

- Basic understanding of dbt fundamentals. We recommend the dbt Fundamentals course.

What you'll build

Here's what you'll build in two parts:

- Google Cloud project setup

- A one-time setup to configure the Google Cloud project you’ll be working with.

- Build and Run the Python Model

- Create, configure, and execute a Python model using BigQuery DataFrames and dbt.

You will set up the environments, build scalable pipelines in dbt, and execute a python model.

Figure 1 - Implementation of the BigFrames submission method for dbt python models

Configure Google Cloud

The dbt BigFrames submission method supports both service account and OAuth credentials. You will use the service account in the following steps.

-

Create a new Google Cloud Project

a. Your new project will have the following list of APIs already enabled, including BigQuery, which is required.

b. Enable the BigQuery API which also enables the following additional APIs automatically

-

Create a service account and grant IAM permissions

This service account will be used by dbt to read and write data on BigQuery and use BigQuery Studio Notebooks.

Create the service account with IAM permissions:

#Create Service Account

gcloud iam service-accounts create dbt-bigframes-sa

#Grant BigQuery User Role

gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/bigquery.user

#Grant BigQuery Data Editor role. This can be restricted at dataset level

gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/bigquery.dataEditor

#Grant Service Account user

gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/iam.serviceAccountUser

#Grant Colab Entperprise User

gcloud projects add-iam-policy-binding ${GOOGLE_CLOUD_PROJECT} --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/aiplatform.colabEnterpriseUser -

(Optional) Create a test BigQuery Dataset

Create a new BigQuery Dataset if you don't already have one:

#Create BQ dataset

bq mk --location=${REGION} echo "${GOOGLE_CLOUD_PROJECT}" | tr '-' '_'_dataset -

Create a GCS bucket to stage the python code

For temporary log and code storage, please create a GCS bucket and assign the required permissions:

#Create GCS bucket

gcloud storage buckets create gs://${GOOGLE_CLOUD_PROJECT}-bucket --location=${REGION}

#Grant Storage Admin over the bucket to your SA

gcloud storage buckets add-iam-policy-binding gs://${GOOGLE_CLOUD_PROJECT}-bucket --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/storage.admin -

Create a GCS bucket to hold the logs

#Create GCS bucket

gcloud storage buckets create gs://${GOOGLE_CLOUD_PROJECT}-bucket-logs --location=${REGION}

#Grant Storage Admin over the bucket to your SA

gcloud storage buckets add-iam-policy-binding gs://${GOOGLE_CLOUD_PROJECT}-bucket-logs --member=serviceAccount:dbt-bigframes-sa@${GOOGLE_CLOUD_PROJECT}.iam.gserviceaccount.com --role=roles/storage.admin

Create, configure, and execute your Python models

-

In your dbt project, create a sql model in your models directory, ending in the

.sqlfile extension. Name itmy_sql_model.sql. -

In the file, copy this sql into it.

select

1 as foo,

2 as bar -

Now create a new model file in the models directory, named

my_first_python_model.py. -

In the

my_first_python_model.pyfile, add this code:def model(dbt, session):

dbt.config(submission_method="bigframes")

bdf = dbt.ref("my_sql_model") #loading from prev step

return bdf -

Configure the BigFrames submission method by using either:

a. Project level configuration via dbt_project.yml

models:

my_dbt_project:

submission_method: bigframes

python_models:

+materialized: viewor

b. The Python code via dbt.config in the my_first_python_model.py file

def model(dbt, session):

dbt.config(submission_method="bigframes")

# rest of the python code... -

Run

dbt run -

You can view the logs in dbt logs. You can optionally view the codes and logs (including previous executions) from the Colab Enterprise Executions tab and GCS bucket from the GCP console.

-

Congrats! You just created your first two python models to run on BigFrames!